Introduction

AdaBoostRegressor acceleration, meaning the practice of making AdaBoostRegressor train and predict faster, is an important part of optimizing machine learning workflows. AdaBoost, short for Adaptive Boosting, is an ensemble technique that combines multiple weak learners into a stronger predictive model. On large datasets or complex tasks, training time can grow quickly, so speeding up AdaBoostRegressor makes it practical to train, tune, and retrain models within a reasonable time budget.

Understanding AdaBoostRegressor

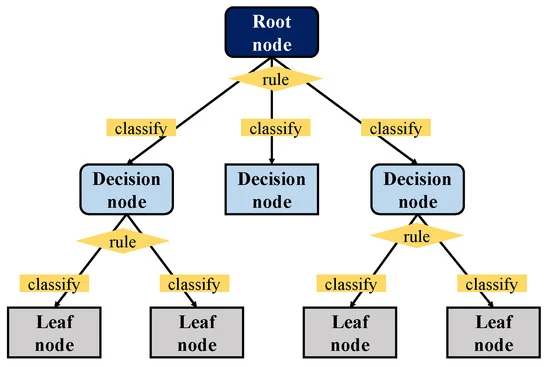

AdaBoostRegressor applies the AdaBoost algorithm to regression tasks. It fits weak learners sequentially: after each round, the training samples with larger prediction errors receive more weight, so the next learner concentrates on the cases its predecessors handled poorly. Because each round depends on the previous one, training cost grows with the number of learners and the size of the data, and it can become a bottleneck. This is where AdaBoostRegressor acceleration comes into play.
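The sequential fitting described above can be observed in a minimal sketch using scikit-learn's `AdaBoostRegressor`. The synthetic dataset, the depth-3 tree, and the choice of 20 estimators are illustrative assumptions, not settings taken from this article.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import AdaBoostRegressor
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data (an assumption, for illustration only).
X, y = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=0)

# The first positional argument is the weak learner fitted in each round.
model = AdaBoostRegressor(
    DecisionTreeRegressor(max_depth=3),
    n_estimators=20,
    random_state=0,
)
model.fit(X, y)

# Learners are fitted one after another; boosting may stop early,
# so the fitted count can be lower than n_estimators.
n_fitted = len(model.estimators_)
print(f"fitted {n_fitted} weak learners")

# staged_predict yields the ensemble prediction after each boosting round.
for round_idx, y_stage in enumerate(model.staged_predict(X), start=1):
    stage_mse = ((y - y_stage) ** 2).mean()
    print(f"round {round_idx:2d}: training MSE = {stage_mse:.1f}")
```

Each extra round adds one more model fit, which is why the number of estimators is the first place to look when training is slow.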

Key Techniques for AdaBoostRegressor Acceleration

Several strategies can make AdaBoostRegressor faster and more efficient:

Efficient Data Handling

One of the primary ways to accelerate AdaBoostRegressor is efficient data handling. Reducing the dimensionality of the data with techniques such as Principal Component Analysis (PCA) lowers the cost of fitting each weak learner. Preprocessing steps such as normalization and feature scaling help when the base learner is sensitive to feature scale (for example, a linear model or a support vector regressor). Decision trees, the usual base learner, are largely unaffected by scaling, so it rarely speeds them up.
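As a rough illustration of dimensionality reduction before boosting, the sketch below chains PCA and `AdaBoostRegressor` in a scikit-learn pipeline. The dataset shape and the choice of 10 components are assumptions made for the example.

```python
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import AdaBoostRegressor
from sklearn.pipeline import make_pipeline

# 50 input features, of which only 10 carry signal (illustrative assumption).
X, y = make_regression(
    n_samples=1000, n_features=50, n_informative=10, noise=5.0, random_state=0
)

# PCA projects the 50 features onto 10 components before boosting,
# so every weak learner is fitted on a smaller feature matrix.
pipe = make_pipeline(
    PCA(n_components=10, random_state=0),
    AdaBoostRegressor(n_estimators=30, random_state=0),
)
pipe.fit(X, y)

predictions = pipe.predict(X)
n_components = pipe.named_steps["pca"].n_components_
print(f"boosting on {n_components} components instead of {X.shape[1]} features")
```

PCA can discard information that matters for the target, so it is worth comparing accuracy on held-out data with and without the reduction step.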

Optimized Algorithms

Switching to an optimized boosting implementation can also cut training time. Implementations that use parallel processing or GPU acceleration can significantly reduce the time required for model training. Libraries such as XGBoost and LightGBM are highly optimized for speed, but they implement gradient boosting rather than AdaBoost, so they are faster alternatives to AdaBoostRegressor, not drop-in accelerated versions of it.

Reduced Complexity Models

Simplifying the base models used in AdaBoostRegressor makes each boosting round cheaper. More complex base models, such as deep decision trees, may improve accuracy, but they also increase training time and work against the weak-learner idea behind boosting. Simpler models, such as shallow trees, often strike a good balance between accuracy and speed.
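The effect of base-model complexity can be checked with a small timing comparison. The sketch below is only indicative: the depths of 2 and 10, the dataset, and the helper name `fit_and_time` are assumptions chosen for the example, and measured times vary by machine.

```python
import time

from sklearn.datasets import make_regression
from sklearn.ensemble import AdaBoostRegressor
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (illustrative assumption).
X, y = make_regression(n_samples=2000, n_features=20, noise=10.0, random_state=0)


def fit_and_time(max_depth):
    """Fit AdaBoost with trees of the given depth and return (model, seconds)."""
    model = AdaBoostRegressor(
        DecisionTreeRegressor(max_depth=max_depth),
        n_estimators=30,
        random_state=0,
    )
    start = time.perf_counter()
    model.fit(X, y)
    return model, time.perf_counter() - start


shallow, shallow_time = fit_and_time(max_depth=2)
deep, deep_time = fit_and_time(max_depth=10)

print(f"depth 2:  {shallow_time:.3f} s")
print(f"depth 10: {deep_time:.3f} s")
```

Timing alone does not settle the choice; the same comparison should be repeated for validation error before fixing the depth.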

Hyperparameter Tuning

Properly tuning the hyperparameters of AdaBoostRegressor can reduce training time without sacrificing accuracy. Key hyperparameters include the number of estimators, the learning rate, and the maximum depth of the base models. Training time grows roughly in proportion to the number of estimators, and a lower learning rate usually needs more estimators to reach the same accuracy, so these settings should be tuned together.
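One way to explore this trade-off is a small grid search that reports both score and fit time per candidate. The sketch below uses scikit-learn's `GridSearchCV`; the grid values, the 3-fold split, and the dataset are assumptions for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import AdaBoostRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic data (illustrative assumption).
X, y = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=0)

# A deliberately small grid over the two ensemble-level hyperparameters.
param_grid = {"n_estimators": [25, 50], "learning_rate": [0.5, 1.0]}

search = GridSearchCV(
    AdaBoostRegressor(random_state=0),
    param_grid,
    cv=3,
    scoring="neg_mean_squared_error",
)
search.fit(X, y)

best_params = search.best_params_
mean_fit_times = search.cv_results_["mean_fit_time"]

# Show each candidate with its average fit time and validation score.
for params, fit_time, score in zip(
    search.cv_results_["params"],
    mean_fit_times,
    search.cv_results_["mean_test_score"],
):
    print(f"{params}: fit {fit_time:.3f} s, neg MSE {score:.1f}")
print("best:", best_params)
```

Reading fit time next to the score makes it easier to pick a setting that is nearly as accurate as the best candidate but cheaper to train.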

Parallel Processing and Distributed Computing

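Parallel processing and distributed computing can help, with one caveat: the boosting rounds themselves are sequential, because each learner depends on the errors of the previous ones, so they cannot simply be split across processors. Parallelism pays off around the boosting loop instead, for example by evaluating cross-validation folds or hyperparameter candidates on separate processors or machines, or by using a base learner that is itself parallelized. This approach is particularly useful for large-scale datasets and extensive tuning runs.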
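A simple case of parallelism around the boosting loop is running cross-validation folds in separate worker processes. The sketch below relies on the `n_jobs` parameter of scikit-learn's `cross_val_score`; the dataset, the 4 folds, and the 2 workers are assumptions for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import AdaBoostRegressor
from sklearn.model_selection import cross_val_score

# Synthetic data (illustrative assumption).
X, y = make_regression(n_samples=1000, n_features=20, noise=10.0, random_state=0)

model = AdaBoostRegressor(n_estimators=30, random_state=0)

# Each fold trains its own independent AdaBoost model, so the folds can run
# in parallel even though the rounds inside each model stay sequential.
scores = cross_val_score(model, X, y, cv=4, scoring="r2", n_jobs=2)

print("R^2 per fold:", scores.round(3))
```

The same `n_jobs` idea applies to hyperparameter search, where every candidate and fold combination is an independent training job.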

Practical Applications of AdaBoostRegressor Acceleration

In practice, AdaBoostRegressor acceleration can benefit a range of domains:

Finance

In financial forecasting, rapid and accurate predictions are crucial. Accelerating AdaBoostRegressor shortens the time from new data to an updated model, which supports faster decision-making in areas such as stock price prediction and risk assessment.

Healthcare

In healthcare, timely analysis of patient data can be lifesaving. Speeding up AdaBoostRegressor lets predictive models that support diagnosis and treatment recommendations be retrained and evaluated more quickly, which can contribute to better patient outcomes.

Marketing

Marketing strategies often rely on analyzing large volumes of customer data. Accelerating AdaBoostRegressor can provide faster insights into customer behavior, enabling more effective targeted campaigns and a better return on investment.

Conclusion

AdaBoostRegressor acceleration matters for anyone training boosting models at scale. Techniques such as efficient data handling, optimized boosting implementations, simpler base models, hyperparameter tuning, and parallel processing can substantially reduce training time. Faster training also leaves more room for experimentation and tuning, which in turn tends to improve the resulting predictive models across applications.

FAQs

What is AdaBoostRegressor?

AdaBoostRegressor is an ensemble learning technique for regression tasks. It fits a sequence of weak learners, each focusing on the errors of the previous ones, and combines them into a single, more accurate model.

How can I speed up AdaBoostRegressor?

You can speed up AdaBoostRegressor through efficient data handling, simpler base models, careful hyperparameter tuning, and parallel processing around the training loop. If that is not enough, consider switching to an optimized boosting implementation.

Why is AdaBoostRegressor acceleration important?

It improves the computational efficiency of the algorithm, which means faster model training and more time for tuning and validation, especially with large datasets.

What are some tools for optimizing AdaBoostRegressor?

XGBoost and LightGBM offer highly optimized implementations of gradient boosting. They do not accelerate AdaBoostRegressor itself, but they are common, faster alternatives when a boosted regression model is needed.

Can AdaBoostRegressor acceleration be applied to all types of data?

In most cases, yes. The techniques are not tied to a particular domain and can be applied to financial, healthcare, and marketing datasets alike, although the size of the speedup depends on the dataset and the base learner.